The existing parsing engine of Spider had two major issues:

- Tcp session resources included the list of packets they were built from. Because the resource kept growing with every packet, this capped the number of packets a Tcp session could hold. Long-lived persistent Tcp sessions caused issues and therefore had to be limited in number of packets.

- Http parsing logs also included the list of packets and the list of HTTP communications found.

I studied how to remove these limitations while also improving parsing speed and footprint, and keeping the same quality of course!

And I managed :) !!

I had to change some of the level 1 architecture decisions I took at the beginning, and it impacted the Whisperers code and 7 other microservices, but it seemed sound and the right decision!

4 weeks later, it is all done, fully regression tested and deployed on Streetsmart! And the result is AWESOME :-)

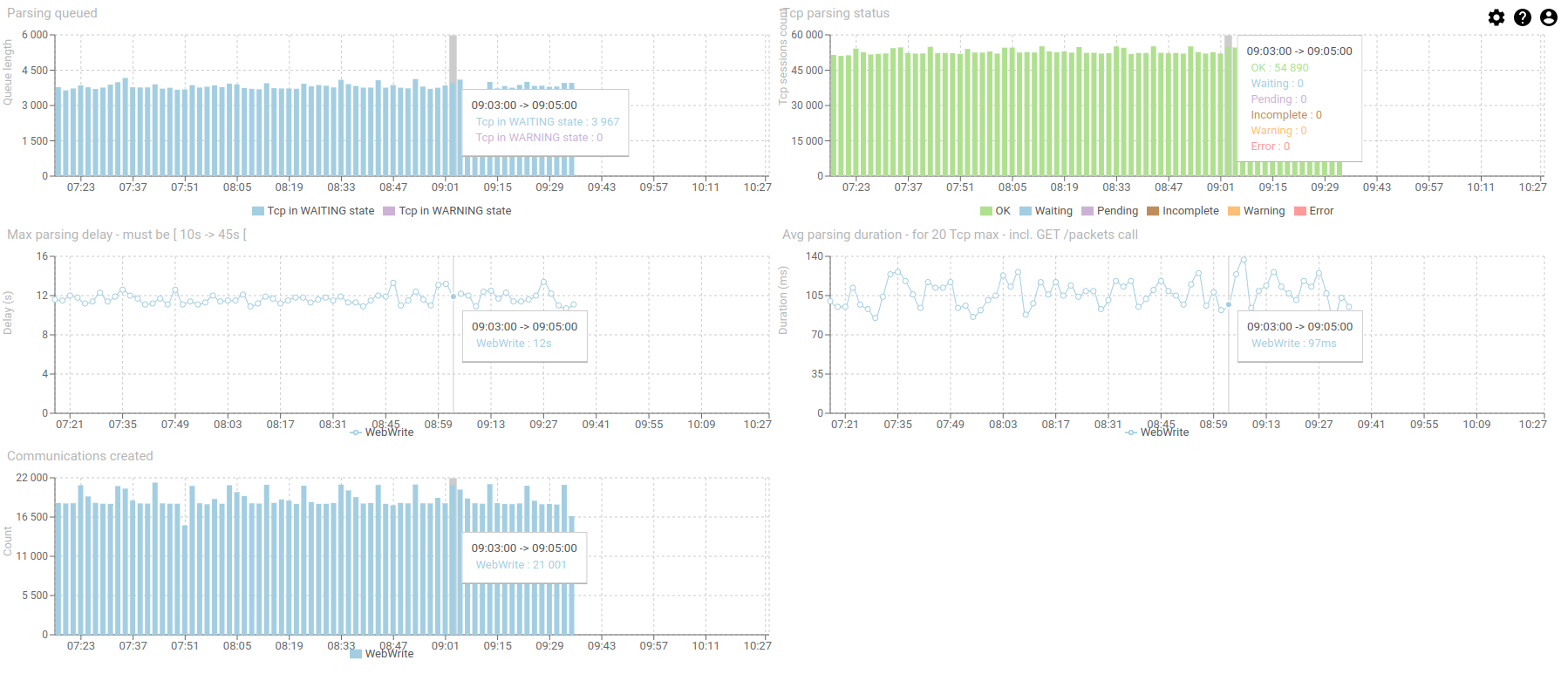

Spider now parses Tcp sessions in streaming mode, with a minimal footprint and reduced server CPU usage for the same load! :)

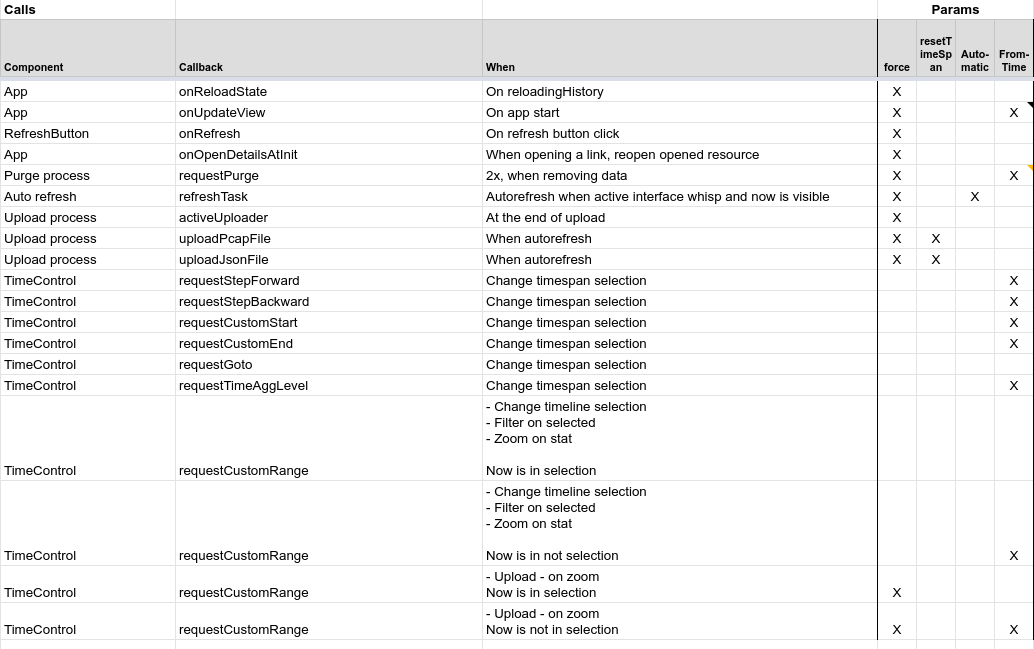
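To give an idea of what parsing in streaming means for the footprint, here is a minimal Python sketch. It is not Spider's actual code: `StreamingSession`, `parse_stream` and the blank-line delimiter are hypothetical names and simplifications chosen for illustration. The point is that the per-session state holds counters and the bytes of the unfinished message only, never the list of packets.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class StreamingSession:
    """Hypothetical per-session state: its size does not grow with packet count."""
    packet_count: int = 0
    byte_count: int = 0
    pending: bytes = b""  # only the bytes of the not-yet-complete message


def parse_stream(payloads: Iterable[bytes], session: StreamingSession,
                 delimiter: bytes = b"\r\n\r\n") -> Iterator[bytes]:
    """Yield each complete message as soon as its delimiter has been received."""
    for payload in payloads:
        session.packet_count += 1
        session.byte_count += len(payload)
        session.pending += payload
        # A single payload may complete zero, one or several messages.
        while delimiter in session.pending:
            message, session.pending = session.pending.split(delimiter, 1)
            yield message


session = StreamingSession()
packets = [b"GET /a HTTP/1.1\r\n", b"\r\nGET /b HT", b"TP/1.1\r\n\r\n"]
for message in parse_stream(packets, session):
    print(message)
```

Each packet can be dropped from working memory once its payload has been consumed, which is what keeps long persistent sessions cheap in this sketch.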

I also took the time to improve the 'understandability' of the process and the code quality. I will document the former soon.

Users... did not see any improvement (nor any issue), except that 'it seems faster', but the figures are here to tell the story!

On the first day, there were only 65 parsing errors out of 43 million communications! The 2 bugs that had slipped through were fixed straight away thanks to good observability! :)

3 /v2 APIs have been added. The corresponding /v1 APIs will be deprecated soon.

But Spider is compatible with both, which made non-regression testing easy: I compared the parsing results of the same network communications... by both engines ;-) !
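Comparing the output of two engines on the same input can be sketched as below. This is only an illustration under assumptions: results are assumed to be dictionaries keyed by a communication id, and `diff_results`, `IGNORED_FIELDS` and the `engineVersion` field are hypothetical names, not part of Spider.

```python
from typing import Any, Dict, List

# Fields that are expected to differ between engines (hypothetical example).
IGNORED_FIELDS = {"engineVersion"}


def diff_results(v1: Dict[str, Dict[str, Any]],
                 v2: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return a readable list of differences between two parsing runs."""
    problems: List[str] = []
    for key in sorted(v1.keys() | v2.keys()):
        if key not in v2:
            problems.append(f"{key}: missing from v2")
        elif key not in v1:
            problems.append(f"{key}: only in v2")
        else:
            a = {k: v for k, v in v1[key].items() if k not in IGNORED_FIELDS}
            b = {k: v for k, v in v2[key].items() if k not in IGNORED_FIELDS}
            if a != b:
                problems.append(f"{key}: content differs")
    return problems


old = {"c1": {"status": 200, "engineVersion": 1}, "c2": {"status": 404}}
new = {"c1": {"status": 200, "engineVersion": 2}, "c2": {"status": 500}}
print(diff_results(old, new))
```

An empty list means both engines agree on every communication, apart from the fields explicitly ignored.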

- Grids and detail views of packets and Tcp sessions have been updated.

- Pcap upload feature has been updated to match the new APIs.

- Downloading pcap packets has been fixed to match the new APIs.

- All this also implied changes in the Tcp sessions display:

  - Details and content details pages now use infinite scroll, as there may be tens or hundreds of thousands of packets.

    - This deserves another improvement later: being able to select the time in the timeline!

  - Getting all packets of a Tcp session is only a single filter away.

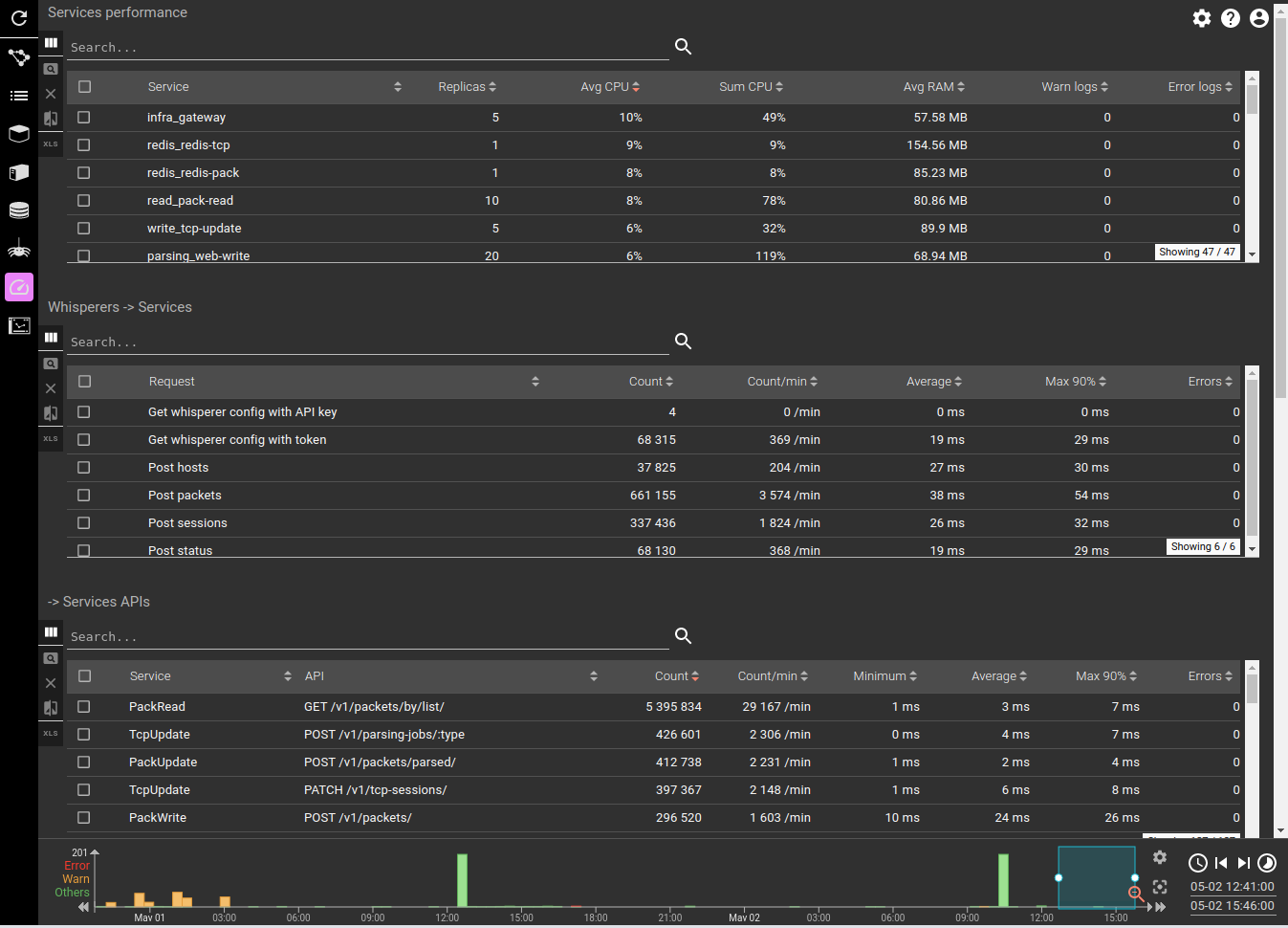

Last but not least... performance!! Give me the figures :)

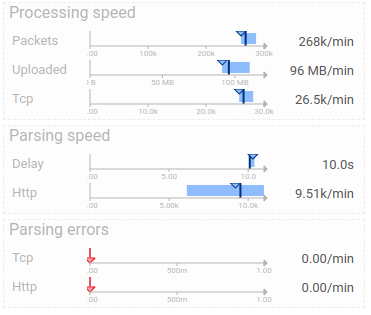

Statistics over:

- 9h of run

- 123 MB/min of parsed data

- 318 000 packets/min

- 31 000 Tcp sessions/min

- For a total of:

  - 171 million packets

  - 66.4 GB

  - 16 million Tcp sessions

  - 0 errors :-)
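As a quick sanity check, the per-minute rates and the totals above agree with each other. The few lines below redo the arithmetic, assuming the rates are averages over the full 9 hours and that GB means 1000 MB:

```python
MINUTES = 9 * 60  # 9h of run

packets_total = 318_000 * MINUTES     # packets over the whole run
sessions_total = 31_000 * MINUTES     # Tcp sessions over the whole run
data_total_gb = 123 * MINUTES / 1000  # MB/min to GB, decimal units assumed

print(f"{packets_total / 1e6:.1f} million packets")
print(f"{sessions_total / 1e6:.1f} million Tcp sessions")
print(f"{data_total_gb:.1f} GB")
```

The small gaps with the published totals come from rounding of the per-minute averages.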

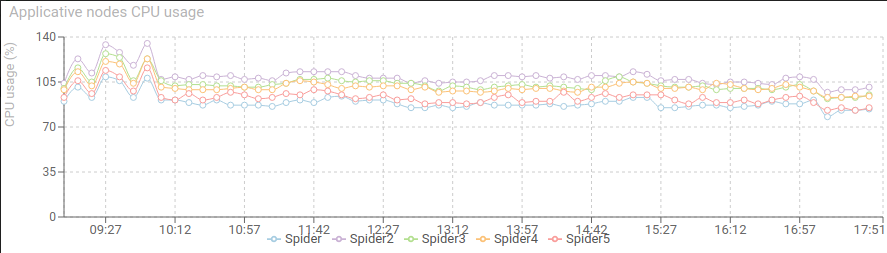

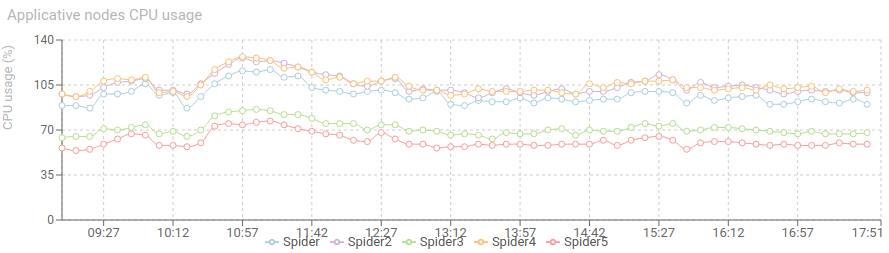

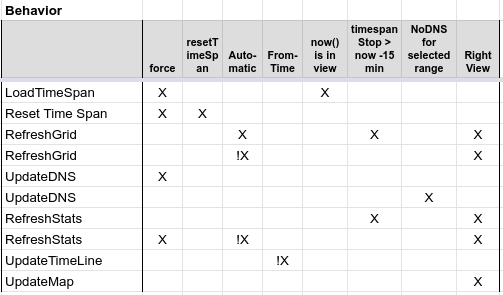

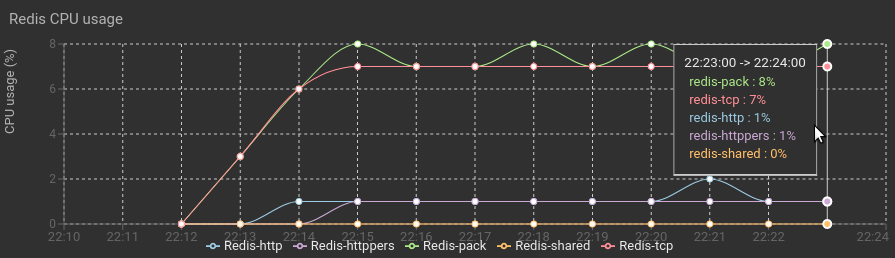

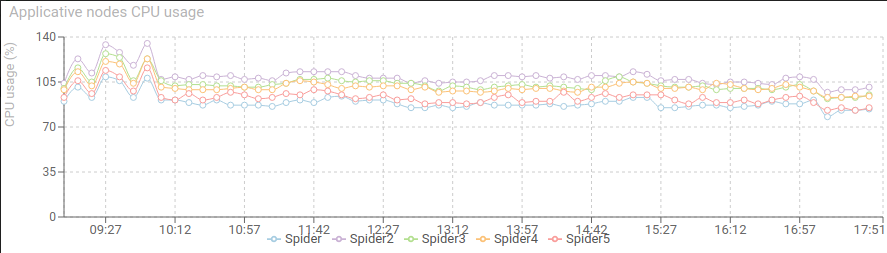

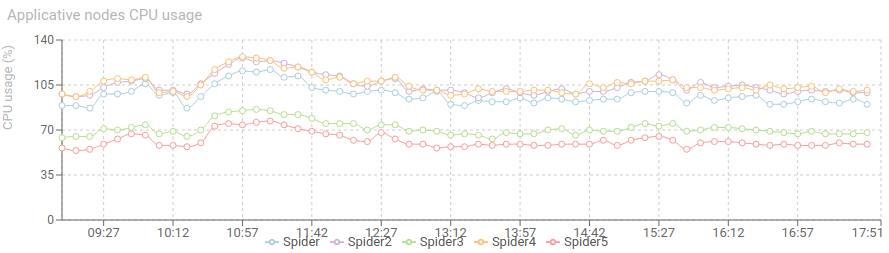

CPU usage dropped!

Before / After:

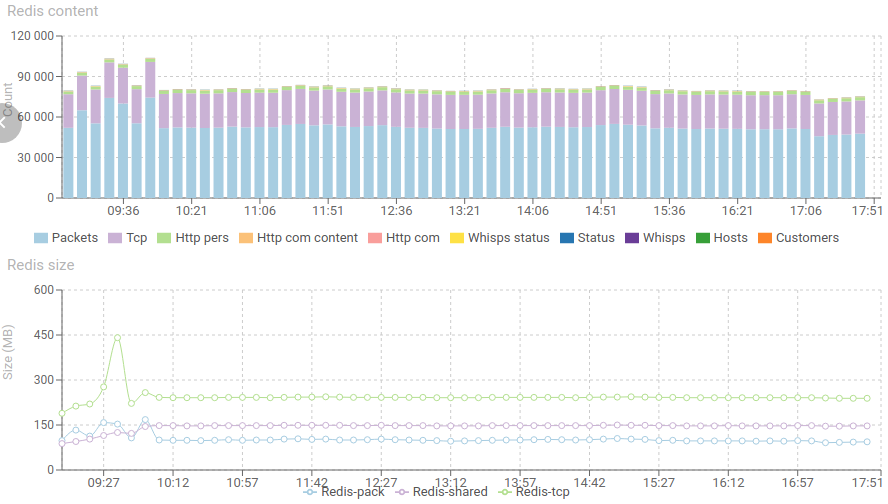

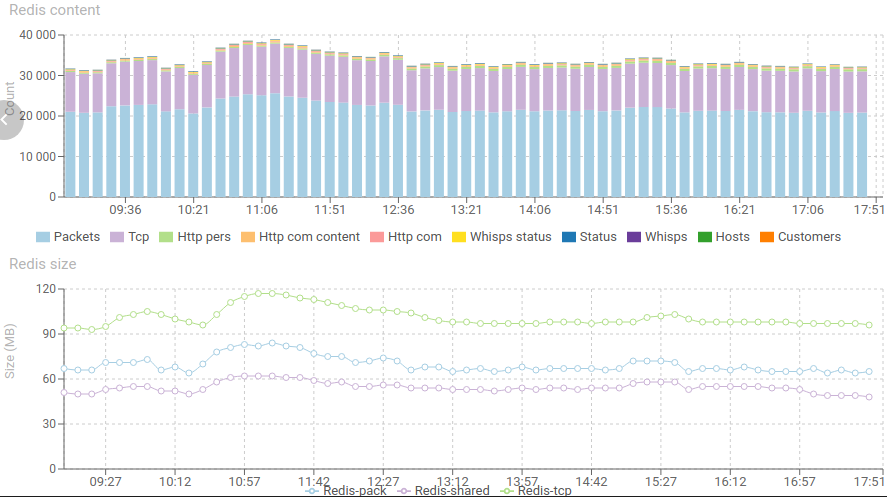

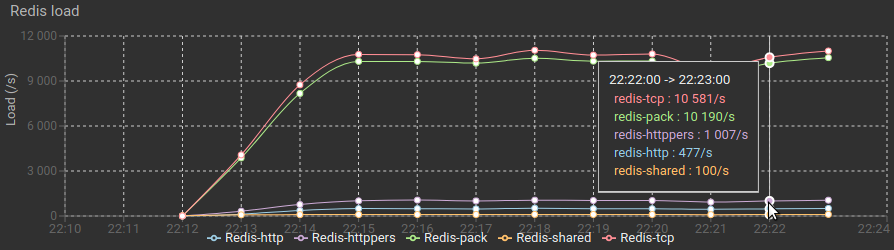

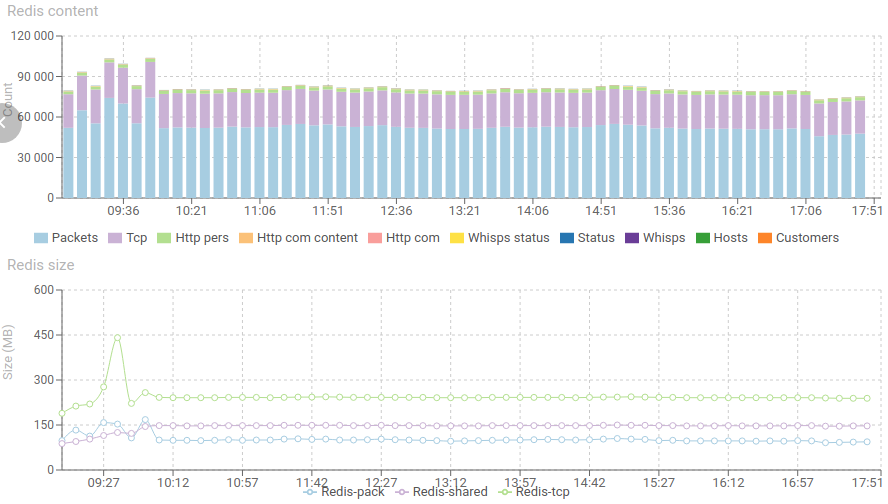

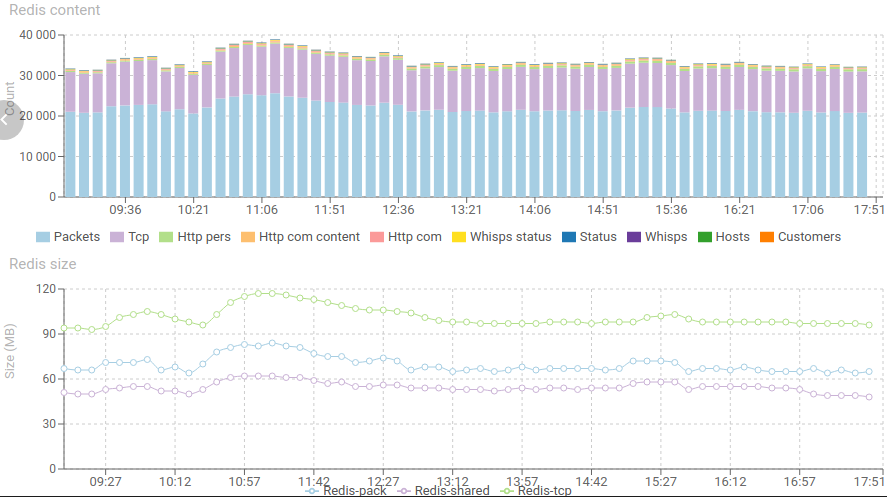

Redis footprint was divided by more than 2!

- From 80 000 items in working memory to 30 000

- From 500 MB to 200 MB memory footprint :)

Before / After:

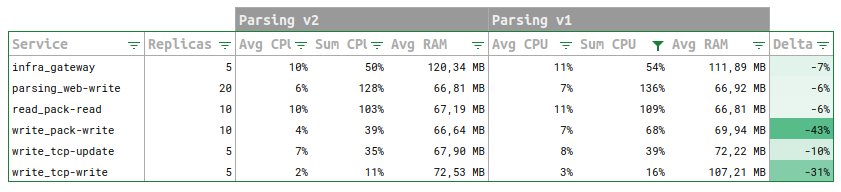

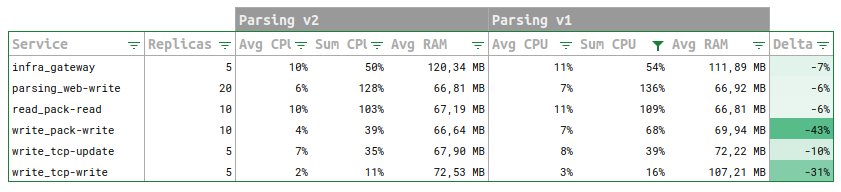

Resource usage

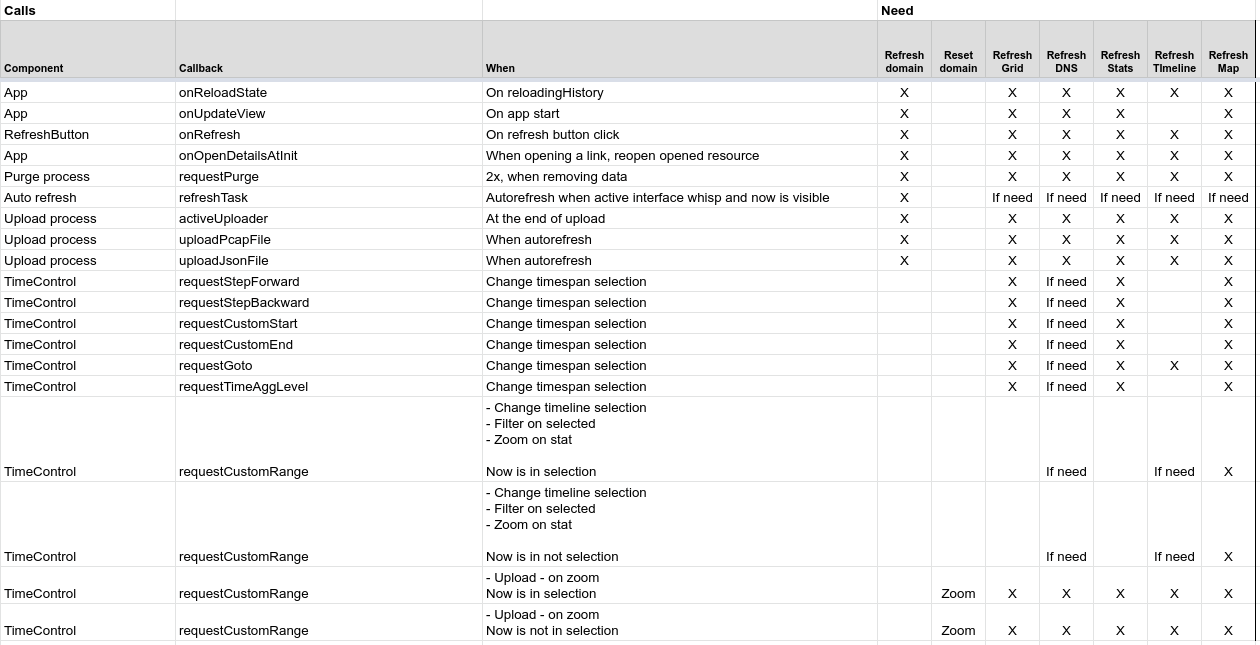

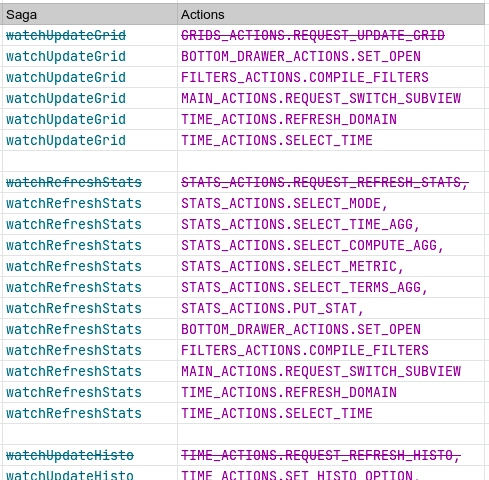

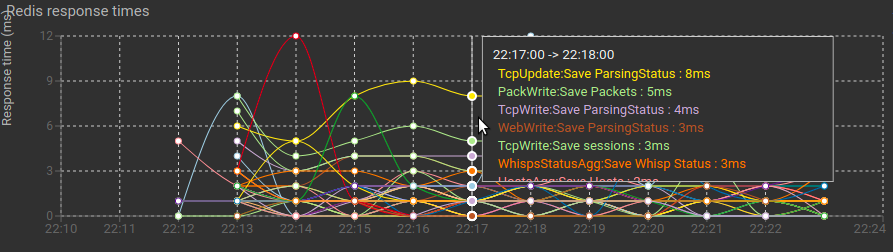

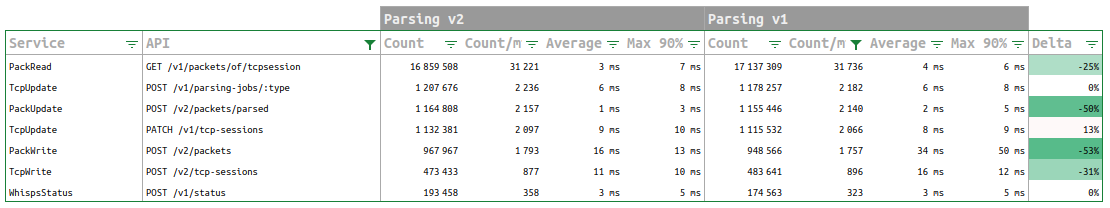

CPU usage of the parsing service dropped by 6%. But most impressive is the CPU drop of 31% and 43% for the inbound services, pack-write and tcp-write.

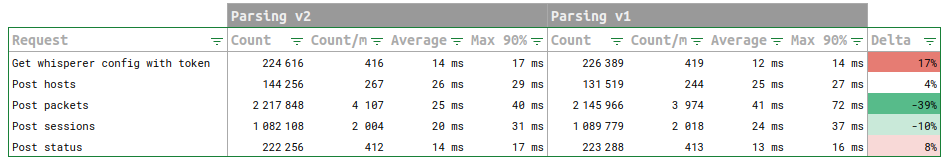

Whisperers --> Spider

Confirming the above figures, the response times of pack-write and tcp-write have improved by 40% and 10%!

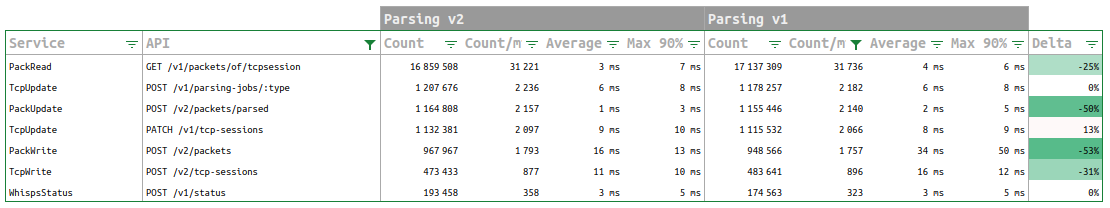

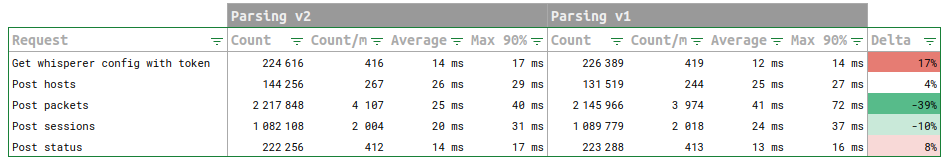

API stats

API statistics confirm the trend, with service-side improvements of up to 50%! Geez!!

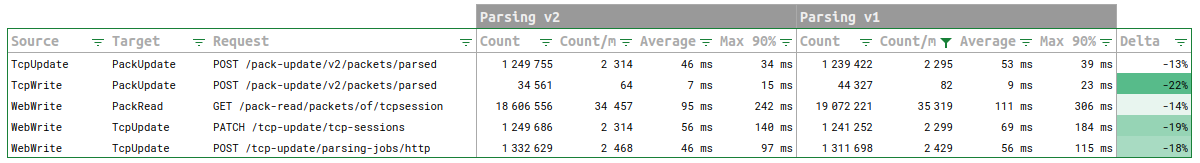

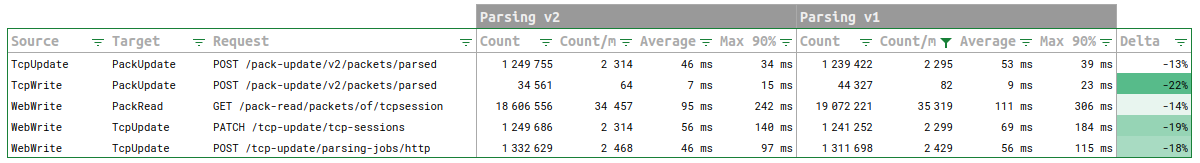

Circuit breakers stats

Seen from the circuit breakers' perspective, the difference is smaller, due to delays in the client services' internal processing.

That was big work! Many changes in many places. But Spider is now faster and better than ever :)